Video Agents: When AI Learns to See and Act in the Physical World

For decades, cameras in industrial settings have been passive observers—recording footage that humans review hours or days later. But what if your cameras could understand what they're seeing and act on it in real time?

This is the promise of Video Agents: AI-powered systems that combine Vision Language Models (VLMs) with automation workflows to create intelligent observers that can reason about the physical world and trigger meaningful actions.

The Problem with Traditional Video Monitoring

Consider a manufacturing floor with 50 cameras monitoring safety compliance. Today, this typically means:

- Massive storage costs for video archives

- Human operators reviewing footage reactively

- Delayed response to safety violations

- Inconsistent enforcement based on human attention spans

The footage exists, but the intelligence doesn't. Cameras see everything but understand nothing.

Enter Vision Language Models

Vision Language Models represent a fundamental shift. Unlike traditional computer vision, which requires custom training for each object or scenario, VLMs can reason about images using natural language.

Instead of training a model to detect "hard hats" specifically, you can simply ask:

"Is this person wearing appropriate safety equipment for a construction site?"

The model understands context and nuance, and it can adapt to scenarios it was never explicitly trained on.
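To make the "just ask" idea concrete, here is a minimal sketch of how a frame and a plain-language question might be packaged for a VLM, and how the reply might be read back. The payload shape and the function names `build_vlm_request` and `parse_vlm_answer` are assumptions for illustration; every real VLM API defines its own request format.

```python
import base64
import json


def build_vlm_request(image_bytes: bytes, question: str) -> dict:
    """Package one frame and one plain-language question (hypothetical payload shape)."""
    return {
        "image_b64": base64.b64encode(image_bytes).decode("ascii"),
        "prompt": (
            f"{question}\n"
            'Answer with JSON only: {"compliant": true|false, "reason": "<short text>"}'
        ),
    }


def parse_vlm_answer(raw_text: str) -> dict:
    """Parse the model's reply; anything malformed is marked as needing review."""
    unparseable = {"compliant": None, "reason": "unparseable model output"}
    try:
        answer = json.loads(raw_text)
    except json.JSONDecodeError:
        return unparseable
    if not isinstance(answer, dict) or not isinstance(answer.get("compliant"), bool):
        return unparseable
    return {"compliant": answer["compliant"], "reason": str(answer.get("reason", ""))}
```

Asking for a structured answer is one design choice among several; it keeps the downstream logic simple because a free-text reply never has to be interpreted by string matching.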

From Seeing to Acting: The Edge-to-Cloud Pipeline

At Cyberwave, we've built the infrastructure to turn any camera into an intelligent agent. Here's how it works:

1. Connect Any Camera

Using our Edge SDK, any camera—from a $20 webcam to an industrial PTZ unit—becomes a smart sensor. The SDK handles:

- Secure WebRTC video streaming

- MQTT control channels

- Automatic reconnection and failover
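As an illustration of what automatic reconnection usually involves, the sketch below shows a generic exponential-backoff retry loop. It is not the Edge SDK's implementation; `backoff_delays`, `connect_with_retry`, and the default timings are hypothetical.

```python
import random
import time
from typing import Callable


def backoff_delays(base: float = 1.0, cap: float = 30.0, attempts: int = 6) -> list[float]:
    """Exponential backoff schedule: base, 2*base, 4*base, ... capped at `cap`."""
    return [min(cap, base * (2 ** i)) for i in range(attempts)]


def connect_with_retry(
    connect: Callable[[], bool],
    delays: list[float],
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call `connect` until it succeeds or the delay schedule is exhausted."""
    for delay in delays:
        if connect():
            return True
        # Jitter spreads out reconnects when many cameras drop at once.
        sleep(delay * random.uniform(0.5, 1.0))
    # One final attempt after the last wait.
    return connect()
```

Passing `sleep` as a parameter keeps the loop testable without real waiting.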

2. Create a Digital Twin

Every physical camera gets a Digital Twin in Cyberwave. This virtual representation allows you to:

- Monitor live feeds from anywhere

- Store and retrieve frames programmatically

- Integrate with AI models and workflows
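One way to picture "store and retrieve frames programmatically" is a small in-memory stand-in for a twin. The `CameraTwin` class below is purely hypothetical and is not the Cyberwave Digital Twin API; it assumes frames arrive in increasing timestamp order.

```python
from bisect import bisect_right
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CameraTwin:
    """Hypothetical in-memory stand-in for a camera's digital twin."""
    camera_id: str
    max_frames: int = 100
    # (timestamp, jpeg_bytes) pairs, oldest first.
    _frames: deque = field(default_factory=deque)

    def store_frame(self, timestamp: float, jpeg: bytes) -> None:
        """Append a frame and evict the oldest ones beyond `max_frames`."""
        self._frames.append((timestamp, jpeg))
        while len(self._frames) > self.max_frames:
            self._frames.popleft()

    def frame_at(self, timestamp: float) -> Optional[bytes]:
        """Return the latest frame captured at or before `timestamp`, or None."""
        times = [t for t, _ in self._frames]
        i = bisect_right(times, timestamp)
        return self._frames[i - 1][1] if i else None
```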

3. Build Intelligent Workflows

Here's where the magic happens. With Cyberwave Workflows, you can chain together:

- Data ingestion from camera feeds

- VLM analysis to understand what's happening

- Conditional logic to filter for events that matter

- Actions like sending alerts, triggering alarms, or controlling other systems

No backend code required. Just drag, drop, and deploy.
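For readers who think in code, the chain the visual editor assembles can be pictured roughly as follows. This is a conceptual sketch only; `run_workflow` and its parameters are hypothetical names, not the Cyberwave Workflows API.

```python
from typing import Callable, Iterable

Frame = bytes
Finding = dict


def run_workflow(
    frames: Iterable[Frame],
    analyze: Callable[[Frame], Finding],
    matters: Callable[[Finding], bool],
    act: Callable[[Finding], None],
) -> int:
    """Ingest -> analyze -> filter -> act. Returns the number of actions fired."""
    fired = 0
    for frame in frames:
        finding = analyze(frame)   # VLM analysis step
        if matters(finding):       # conditional logic step
            act(finding)           # alert, alarm, or control step
            fired += 1
    return fired
```

Each stage is an independent callable, which mirrors the idea that stages can be swapped without touching the others.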

Real-World Example: The Active Safety Officer

Let's make this concrete. Imagine you're running a manufacturing facility where PPE compliance is critical. Here's how you'd build an automated safety system:

The Setup:

- A camera watching a work zone

- A VLM workflow running every minute

- Email alerts for violations

The Logic:

IF person detected in frame:
    IF NOT wearing (hard hat AND safety vest):
        → Send alert to safety manager
        → Log violation with timestamp and image
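The rule above can be sketched as a small runnable function. The `FrameAnalysis` fields and the shape of the violation record are assumptions for illustration; in practice they would come from whatever the VLM step returns.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class FrameAnalysis:
    """Hypothetical structured result of the VLM step for one frame."""
    person_detected: bool
    hard_hat: bool
    safety_vest: bool


def check_ppe(
    analysis: FrameAnalysis, frame_id: str, now: Optional[datetime] = None
) -> Optional[dict]:
    """Return a violation record if the rule fires, otherwise None."""
    if not analysis.person_detected:
        return None
    if analysis.hard_hat and analysis.safety_vest:
        return None
    worn = (("hard hat", analysis.hard_hat), ("safety vest", analysis.safety_vest))
    return {
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
        "frame_id": frame_id,
        "missing": [name for name, is_worn in worn if not is_worn],
    }
```

A non-`None` result is what would feed the alert and the audit log; an empty frame or a fully equipped worker produces nothing.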

The Result:

- Zero manual monitoring required

- Alerts within about a minute of a violation, given the one-minute workflow interval

- Complete audit trail with visual evidence

- Consistent enforcement 24/7

The system doesn't get tired, doesn't get distracted, and doesn't miss violations because it was checking another screen.

Beyond Safety: What Video Agents Can Do

PPE compliance is just one application. Video Agents can transform any scenario where visual understanding drives action:

Quality Control

- Detect defects on production lines in real time

- Flag anomalies that deviate from reference images

- Automatically route defective items for inspection

Inventory Management

- Monitor stock levels on shelves or pallets

- Trigger reorder workflows when supplies run low

- Track asset movement through facilities

Security and Access Control

- Identify unauthorized access attempts

- Detect suspicious behavior patterns

- Integrate with physical access systems

Environmental Monitoring

- Detect spills, leaks, or hazardous conditions

- Monitor equipment status through visual indicators

- Track environmental compliance in real time

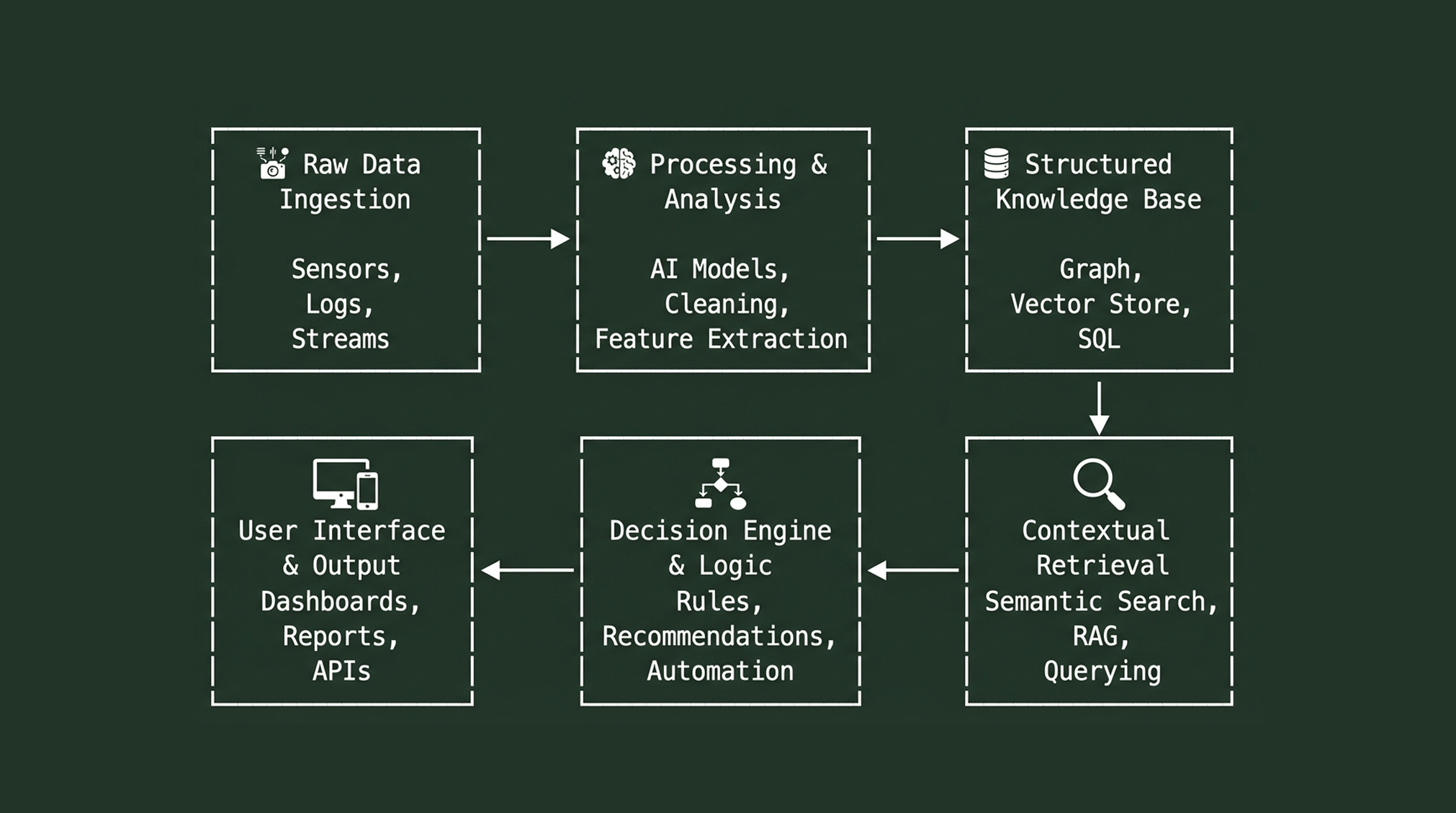

The Technical Architecture

For the engineers in the room, here's what's happening under the hood.

The architecture decouples hardware from intelligence, meaning:

- Swap cameras without rewriting code

- Upgrade AI models without touching hardware

- Scale horizontally by adding more cameras

- Deploy globally with edge-to-cloud flexibility
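The decoupling described above can be illustrated with two small interfaces. This is a sketch under assumed names (`FrameSource`, `VisionModel`, `VideoAgent`), not the platform's actual class structure; the point is only that the agent depends on interfaces rather than on a specific camera or model.

```python
from typing import Protocol


class FrameSource(Protocol):
    def read_frame(self) -> bytes: ...


class VisionModel(Protocol):
    def describe(self, frame: bytes, question: str) -> str: ...


class VideoAgent:
    """Depends only on the two interfaces, never on a concrete camera or model."""

    def __init__(self, source: FrameSource, model: VisionModel) -> None:
        self.source = source
        self.model = model

    def ask(self, question: str) -> str:
        return self.model.describe(self.source.read_frame(), question)


class StaticCamera:
    """Stand-in camera that always returns the same frame."""

    def __init__(self, frame: bytes) -> None:
        self._frame = frame

    def read_frame(self) -> bytes:
        return self._frame


class EchoModel:
    """Stand-in model that reports the frame size and the question it received."""

    def describe(self, frame: bytes, question: str) -> str:
        return f"{len(frame)} bytes; asked: {question}"
```

Replacing `StaticCamera` or `EchoModel` with another class that has the same method requires no change to `VideoAgent`, which is the property the list above describes.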

Getting Started

Ready to turn your cameras into intelligent agents? Here's your path:

- Request Early Access to the Cyberwave platform

- Install the Edge SDK on any Linux/macOS machine with a camera

- Create your first Workflow using our visual editor

- Deploy and iterate as you discover new use cases

We've published a detailed technical tutorial that walks through building a complete PPE compliance system from scratch.

The Future of Physical AI

Video Agents represent a broader shift in how AI interacts with the physical world. We're moving from:

- Reactive to proactive systems

- Human-dependent to human-augmented monitoring

- Siloed cameras to intelligent sensor networks

The cameras are already there. The AI is ready. The only question is: what will you build?

Ready to explore? Join our Discord to connect with builders already deploying Video Agents, or schedule a demo to see the platform in action.